This article discusses the threat that asteroids and other near-Earth objects (NEOs) pose to humanity. It points out that detecting large asteroids before they hit is relatively easy compared with stopping nuclear war or climate change.

The article begins as follows:

Ed Lu wants to save the world. In a talk Thursday at the University of Hawai‘i, the former astronaut recalled looking at the moon while he was aboard the International Space Station. Its craters were dramatic visual evidence of the number and size of the asteroid impacts the moon had sustained. Lu knew that the more massive Earth is hit by asteroids even more often than the moon, although the evidence of those collisions is obscured by water, weather, and vegetation.

We know with a fair amount of confidence how often asteroids hit the earth. In the next 100 years there’s about a 30% chance that the planet will be hit by a “city-killer” asteroid like the one that exploded over the remote Tunguska River region of central Russia in 1908. That asteroid exploded with a force about 500 times greater than the nuclear bomb dropped on Hiroshima. If an asteroid that size were to hit a city or a densely-inhabited area, it would kill millions of people. There’s about a 1% chance in the next 100 years that the planet will be hit by an asteroid 20 times the mass of the Tunguska asteroid. An asteroid that size would hit with about five times the explosive force of all the bombs—nuclear weapons included—used in World War II. And there’s a 0.001% chance that in the next 100 years we will be hit by an asteroid large enough to wipe out human civilization entirely.

“The odds of a space-object strike during your lifetime may be no more than the odds you will die in a plane crash,” Nathan Myhrvold memorably said. “But with space rocks, it’s like the entire human race is riding on the plane.” A thousandth of a percent is not by itself a very large chance. But it’s still high for something that could mean the extinction of the human race and destruction of everything we know and love. As a species, we are—whether we’re aware of it or not—essentially playing a game of Russian roulette. Our number probably won’t come up this century or even the next century. But, as Lu said, in the long run “you can’t beat the house.”
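To put rough numbers on the "you can't beat the house" point, here is a minimal sketch that compounds the per-century odds quoted above over longer time spans. It assumes each century is an independent trial with the same fixed probability, which is a simplification of mine and not something the article states. The function name and the labels are illustrative only.

```python
def chance_of_at_least_one(per_century: float, centuries: int) -> float:
    """Chance of one or more impacts over `centuries` centuries.

    Assumption: each century is an independent trial with the same
    probability `per_century`. The chance of no impact in any of them
    is (1 - p) ** n, so the chance of at least one is the complement.
    """
    return 1.0 - (1.0 - per_century) ** centuries


# Per-century probabilities as quoted in the excerpt (30%, 1%, 0.001%).
PER_CENTURY = {
    "city-killer (Tunguska-sized)": 0.30,
    "20 times the Tunguska mass": 0.01,
    "civilization-ending": 0.00001,
}

if __name__ == "__main__":
    for label, p in PER_CENTURY.items():
        for n in (1, 10, 100):
            odds = chance_of_at_least_one(p, n)
            print(f"{label}: {n} centuries -> {odds:.4%}")
```

Under these assumptions, even a small per-century chance grows steadily as the time span lengthens, which is the sense in which the risk cannot be outrun by waiting.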

The remainder of the article is available on the Anthropocene blog.

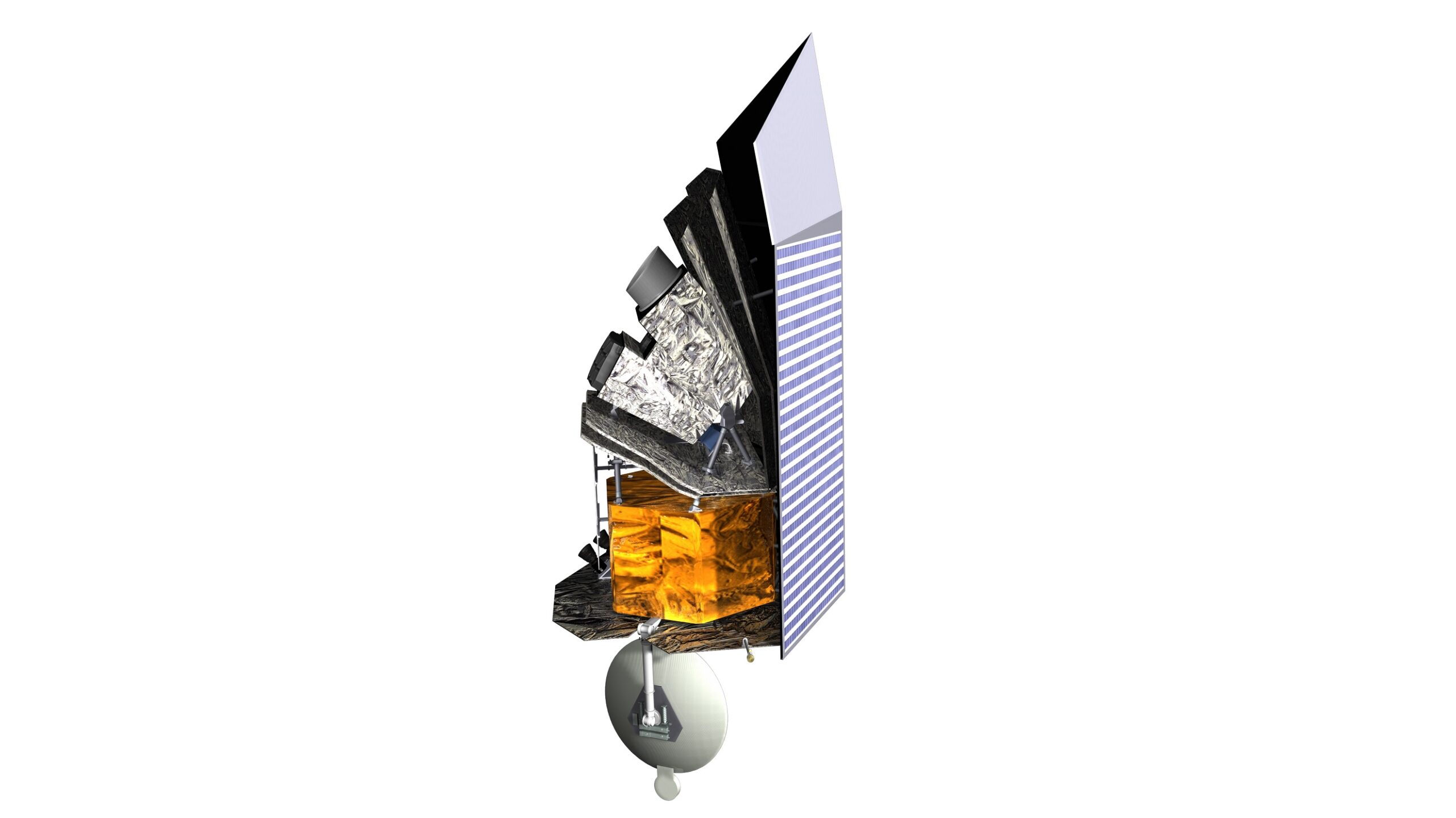

Image credit: B612Julie