View in the Bulletin of the Atomic Scientists

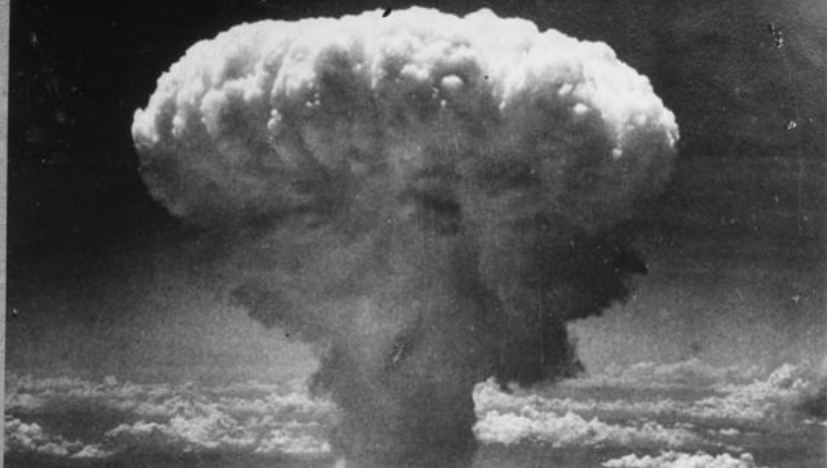

This article discusses the tradeoffs involved in the use of biological weapons in the face of greater threats such as global catastrophes.

The article begins as follows:

The status quo of large nuclear arsenals risks global catastrophe so severe that human civilization may never recover. If we care about the survival of human civilization, then we should seek solutions to make sure such a catastrophe never occurs. This is my starting point for exploring the potential for winter-safe deterrence. Perhaps, upon closer inspection, winter-safe deterrence will prove infeasible. But I do believe it is worth closer inspection. To that effect, I welcome this roundtable discussion and the broader conversation that my research has sparked.

I have defined winter-safe deterrence as military force capable of meeting the deterrence goals of today’s nuclear-armed states without risking catastrophic nuclear winter. Winter-safe deterrence recognizes two basic issues: first, large nuclear arsenals pose a devastating catastrophic risk; and second, nuclear-armed states may refuse to relinquish almost the entirety of their nuclear arsenals unless their deterrence goals are met through other means. Winter-safe deterrence thus aims to make the world safer given the politics of deterrence.

Personally, I would prefer that today’s nuclear-armed states simply decide that they no longer need deterrence based on the threat of massive destruction. Such destruction is immoral and against the spirit of international humanitarian law. To that effect, we should seek solutions that reduce the demand for this sort of deterrence, for example by improving relations between nuclear-armed states or by stigmatizing this deterrence. The ongoing initiative on the humanitarian impacts of nuclear weapons is enhancing precisely this stigma. I am proud to support the initiative. It is a first-best solution to nuclear war risk.

The remainder of the article is available in the Bulletin of the Atomic Scientists.

Image credit: United States Office of War Information

This blog post was published on 28 July 2020 as part of a website overhaul and backdated to reflect the time of the publication of the work referenced here.