View in the Bulletin of the Atomic Scientists

This article discusses how the risk of nuclear winter could be minimized while still meeting nuclear deterrence needs.

The article begins as follows:

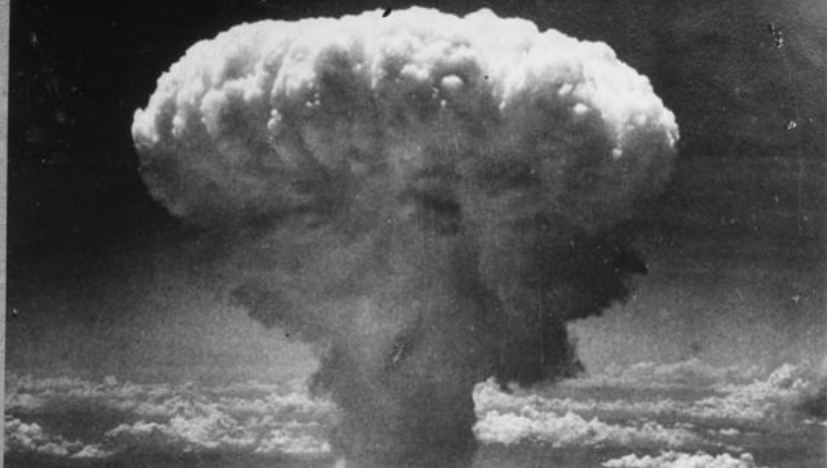

The biggest danger posed by today’s large nuclear arsenals is nuclear winter. One or two nuclear strikes could wreak devastating destruction on a few regions, but would not destroy human civilization as a whole. The roughly 16,300 nuclear weapons that currently exist, though, are more than enough to cause nuclear winter, which, through extreme cold conditions, ultraviolet radiation, and crop failures, could threaten the whole of humanity. If we fail to avoid nuclear winter, we could all die, or we could see civilization collapse, never to return.

That makes avoiding nuclear winter paramount. But the world’s major powers, in particular the United States and Russia, have long argued that their large nuclear arsenals are required for deterrence. Deterrence means threatening another party with some sort of harm in order to persuade it not to do something. In this case, it means threatening massive nuclear retaliation to dissuade another country from launching an attack itself. If two countries were to follow through on their threats of nuclear retaliation, mutual destruction would be assured. That deters both sides from starting a war. But nuclear deterrence can fail, as demonstrated during events like the Cuban missile crisis, when there have been escalations towards nuclear war. (Martin Hellman, Ward Wilson, and others have documented such events.)

As things stand now, a failure of deterrence could result in nuclear winter. It may be possible, though, for the world’s biggest nuclear powers to meet their deterrence needs without keeping the large nuclear arsenals they maintain today. They could practice a winter-safe deterrence, which would rely on weapons that pose no significant risk of nuclear winter.

The remainder of the article is available in the Bulletin of the Atomic Scientists.

Image credit: United States Office of War Information

This blog post was published on 28 July 2020 as part of a website overhaul and backdated to reflect the time of the publication of the work referenced here.