Eight countries have large nuclear arsenals: China, France, India, Israel, Pakistan, Russia, the United Kingdom, and the United States. North Korea might have a small nuclear arsenal. These countries have nuclear weapons for several reasons. Perhaps the biggest reason is deterrence. Nuclear deterrence means threatening other countries with nuclear weapons in order to persuade them not to attack. When nuclear deterrence works, it can help avoid nuclear war. However, nuclear deterrence does not work perfectly, leaving a nonzero risk of nuclear war.

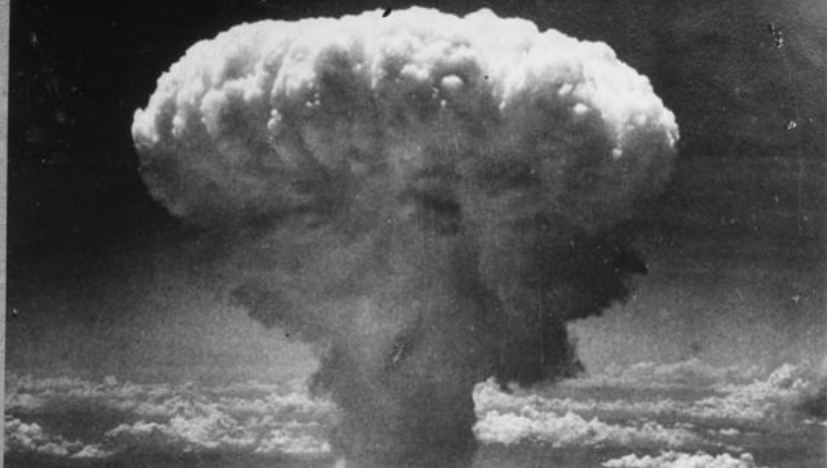

Background: nuclear winter risk. Perhaps the biggest consequence of nuclear war is nuclear winter. Nuclear winter is caused by smoke from nuclear explosions rising high into the atmosphere, where it spreads around the world. The smoke blocks incoming sunlight, cooling the surface and reducing precipitation. These extreme environmental conditions can cause health problems, famines, and other harms. Nuclear winter could even cause the collapse of human civilization or human extinction.

Winter-safe deterrence. A common policy response to nuclear winter risk is to call for nuclear disarmament. However, nuclear-armed countries do not want to disarm. They want their nuclear weapons, in particular for deterrence. This paper introduces the concept “winter-safe deterrence”, defined as military force capable of meeting the deterrence goals of today’s nuclear-armed countries without risking catastrophic nuclear winter. Nuclear-armed countries may be receptive to winter-safe deterrence because it does not require them to sacrifice their deterrence goals. If countries choose winter-safe deterrence, then the world will be safe from nuclear winter.

Winter-safe nuclear arsenal limits. There is uncertainty in the total human impacts of nuclear winter. What can be said is that a larger nuclear war has a higher probability of causing a major global catastrophe from nuclear winter. It is important to keep the probability of global catastrophe low. Therefore, this paper proposes a winter-safe limit of 50 total nuclear weapons worldwide. This limit reflects the current state of knowledge about nuclear winter, and could be changed pending future research.

Military options for winter-safe deterrence. Countries pursuing winter-safe deterrence have a variety of options. One option is deterrence with small nuclear arsenals consistent with the 50 weapon limit. Other options include conventional military force, conventional prompt global strike, neutron bombs, biological and chemical weapons, cyber weapons, and electromagnetic weapons. None of these weapons offers the same destructive potential as a large nuclear arsenal. However, some could make for effective deterrents. This paper conducts technical analysis of the deterrence potential of these weapons. The paper tentatively finds that winter-safe deterrence is feasible. Given the dangers of nuclear winter, winter-safe deterrence is also desirable.

Note: This paper was one of five articles shortlisted for the 2016 Bernard Brodie Prize for the best article in Contemporary Security Policy over the previous year. It also sparked extensive debate about the concept of winter-safe deterrence and the article’s discussion of non-contagious biological weapons. See also “Winter-safe deterrence as a practical contribution to reducing nuclear winter risk: A reply” and GCRI’s work on nuclear war.

Academic citation:

Seth D. Baum, 2015. Winter-safe deterrence: The risk of nuclear winter and its challenge to deterrence. Contemporary Security Policy, vol. 36, no. 1 (April), pages 123-148, DOI 10.1080/13523260.2015.1012346.


Image credit: United States Office of War Information

This blog post was published on 28 July 2020 as part of a website overhaul and backdated to reflect the time of the publication of the work referenced here.