Asteroid collision is probably the best-understood global catastrophic risk. This paper shows that it is not so well understood after all, because of uncertainty in the human consequences of a collision. This finding matters both for asteroid risk and for the wider study of global catastrophic risk: if even asteroid risk is not well understood, then neither are other risks such as nuclear war and pandemics.

In addition to our understanding of the risks, two other important issues are at stake. One is policy for asteroids (and likewise for other risks). The paper argues that greater uncertainty about the human consequences demands more aggressive asteroid risk reduction, in order to err on the side of avoiding catastrophe. The other is which risks to prioritize, in particular extinction risks versus catastrophes that leave survivors. Uncertainty about the fate of survivors can be a reason to give sub-extinction risks a priority similar to that of extinction risks. This issue is also discussed in the recent paper Long-Term Trajectories of Human Civilization.

The paper also discusses the prospect of an asteroid collision triggering nuclear war. Asteroid collisions produce explosions that could be mistaken for a nuclear attack. Many similar nuclear war false alarms have occurred, as documented in the GCRI paper A Model for the Probability of Nuclear War. The prospect of an asteroid-collision false alarm was in the news recently due to an asteroid explosion near the US Air Force’s Thule Air Base in Greenland. This issue has important policy implications for both the asteroid and military communities, as discussed in the paper.

Academic citation:

Baum, Seth D., 2018. Uncertain human consequences in asteroid risk analysis and the global catastrophe threshold. Natural Hazards, DOI 10.1007/s11069-018-3419-4.


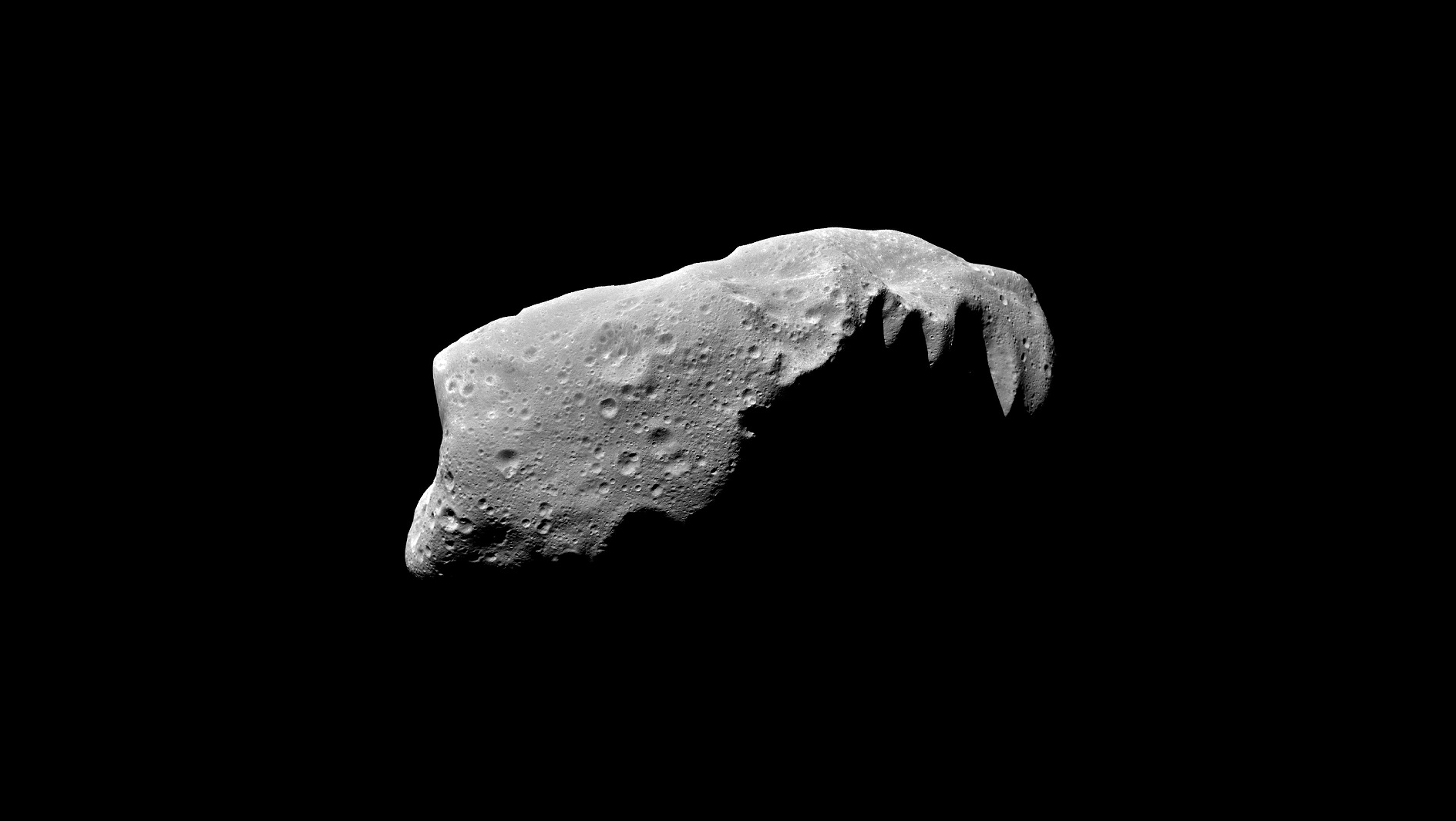

Image credit: NASA/JPL