A downside dilemma is any decision in which one option promises benefits but comes with a risk of significant harm. An example is the game of Russian roulette, where the decision is whether to play: choosing to play promises benefits but comes with the risk of death. This paper introduces the great downside dilemma as any decision in which one option promises great benefits to humanity but comes with a risk of human civilization being destroyed. This dilemma is great because the stakes are so high; indeed, they are astronomically high. The great downside dilemma is especially common with emerging technologies.

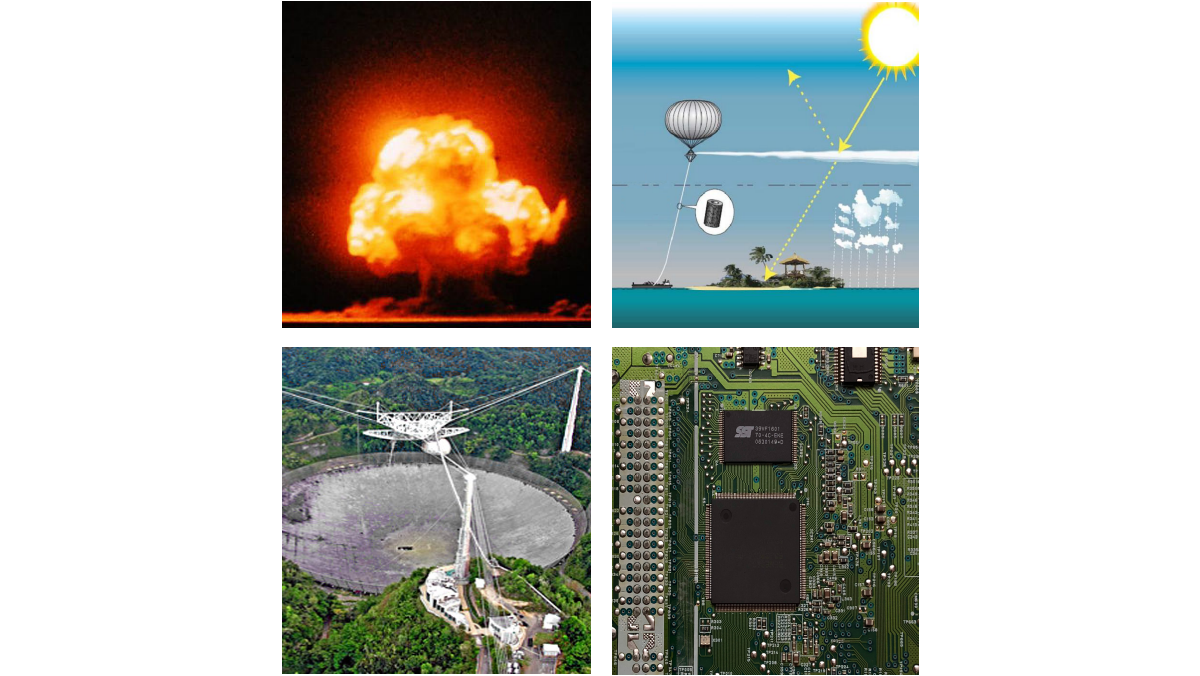

Historical precedents: nuclear weapons and messaging to extraterrestrial intelligence. The great downside dilemma for emerging technologies has been faced at least twice before. The first precedent is nuclear weapons. It came in the desperate circumstances of World War II: the decision of whether to test-detonate the first nuclear weapon. Some physicists suspected that the detonation could ignite the atmosphere, killing everyone on Earth. Fortunately, they understood the physics well enough to correctly conclude that the ignition wouldn’t happen. The second precedent is messaging to extraterrestrial intelligence (METI). The decision was whether to send messages. While some messages have been sent, METI is of note because the dilemma still has not been resolved. Humanity still does not know if METI is safe. Thus METI decisions today face the same basic dilemma as the initial decisions in decades past.

Dilemmas in the making: stratospheric geoengineering and artificial general intelligence. Several new instances of the great downside dilemma lurk on the horizon. The stakes for these new dilemmas are even higher, because they come with much higher probabilities of catastrophe. This paper discusses two. The first is stratospheric geoengineering, which promises to avoid the most catastrophic effects of global warming. However, stratospheric geoengineering could fail, bringing an even more severe catastrophe. The second is artificial general intelligence, which could either solve a great many of humanity’s problems or kill everyone, depending on how it is designed. Neither of these two technologies currently exists, but both are subjects of active research and development. Understanding these technologies and the dilemmas they pose is already important, and it will only get more important as the technologies progress.

Technologies that don’t pose the great downside dilemma. Not all technologies present a great downside dilemma. These technologies may have downsides, but they do not threaten significant catastrophic harm to human civilization. Some of these technologies even hold great potential to improve the human condition, including by reducing other catastrophic risks. These latter technologies are especially attractive and in general should be pursued. The paper discusses three such technologies: sustainable design technology, nuclear fusion power, and space colonization technology. Some sustainable design is quite affordable, including the humble bicycle, while nuclear fusion and space colonization are quite expensive. However, all of these technologies can play a helpful role in improving the human condition and avoiding catastrophe.

Academic citation:

Seth D. Baum, 2014. The great downside dilemma for risky emerging technologies. Physica Scripta, vol. 89, no. 12 (December), article 128004, DOI 10.1088/0031-8949/89/12/128004.

Image credits:

Nuclear explosion: United States Department of Energy

Arecibo Observatory: JidoBG

Geoengineering: Hugh Hunt

Computer chip: Aler Kiv

This blog post was published on 28 July 2020 as part of a website overhaul and backdated to reflect the time of the publication of the work referenced here.