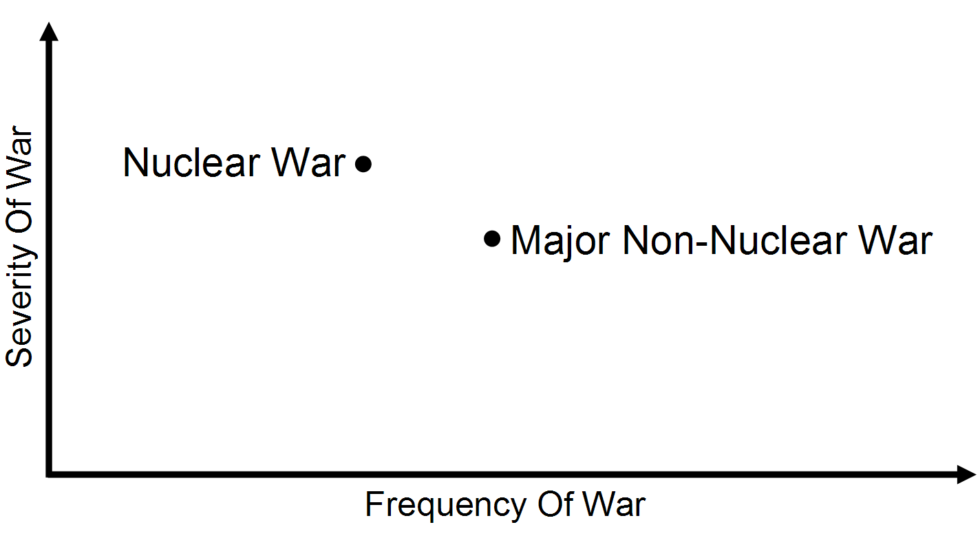

Would the world be safer with or without nuclear weapons? On one hand, nuclear weapons may increase the severity of war due to their extreme explosive power. On the other hand, they may decrease the frequency of major wars by strengthening deterrence. Is the decrease in frequency enough to offset the increase in severity? (This tradeoff is illustrated in the graphic above.) This is a vital policy question for which risk analysis has a central role to play. Exactly what that role is, and what it should be, is the focus of a new paper by GCRI published in conjunction with the UCLA Garrick Institute for the Risk Sciences.
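One rough way to make the tradeoff concrete is an expected-value comparison. The sketch below is an illustrative assumption, not a formula taken from the paper. The symbols are hypothetical: $p$ is the probability of a major war in a given period, $s$ is the severity of such a war, and the subscripts $N$ and $0$ mark a world with and without nuclear weapons.

```latex
% Expected harm from major war in each world (illustrative symbols only)
R_N = p_N \, s_N, \qquad R_0 = p_0 \, s_0

% The world with nuclear weapons is safer in expectation only if
R_N < R_0 \iff \frac{p_N}{p_0} < \frac{s_0}{s_N}
```

Under this framing, nuclear weapons make the world safer only if deterrence reduces the frequency of major war by a larger factor than the weapons increase its severity. Expected value is just one possible risk metric, and other metrics could give a different answer.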

The paper surveys the state of nuclear war risk analysis and examines its role in policy decision-making. It contains the most detailed and up-to-date review of the nuclear war risk literature currently available. The literature has many gaps and is arguably thin given the importance of the topic. Nonetheless, meaningful progress is being made, even though nuclear war is a difficult risk to analyze.

While more nuclear war risk research would be helpful, the paper sees a bigger challenge in the link between research and policymaking. The research literature often proceeds as if nuclear war policy were set on the basis of careful risk analysis. In practice, nuclear war policy tends not to use much risk analysis. Indeed, policymakers' limited interest in risk analysis may be one reason why there has been so little nuclear war risk research. Interested scholars could continue to produce more research, but it may all be for nothing if policymakers ignore it.

The paper proposes two solutions to address the gap between nuclear war risk analysis and policy. First, nuclear war risk researchers and their allies can advocate for a risk perspective to policymakers. The advocacy would explain to policymakers why a risk perspective is valuable for important policy questions, such as whether the world is safer with or without nuclear weapons. Second, researchers can translate the findings of risk research into active policy debates. Translation makes it easier for policymakers to use the risk research and thus more likely that they will do so.

This paper is part of GCRI’s ongoing work on nuclear war and risk & decision analysis. It draws on GCRI’s lengthy experience applying risk analysis to nuclear war. It also touches on themes of relevance to the analysis of other global catastrophic risks. Many, perhaps all, of the global catastrophic risks are difficult but important to analyze, and they face a similar challenge: whether risk analysis actually gets used in major decisions.

The paper is published in the Proceedings of the One Day Workshop on Quantifying Global Catastrophic Risks, which was hosted last year by the B. John Garrick Institute for the Risk Sciences at the University of California, Los Angeles. The Garrick Institute and GCRI are perhaps the two leading groups applying risk analysis to global catastrophic risk. (Note: John Garrick is a GCRI Senior Advisor.)

Academic citation:

Seth D. Baum, 2018. Reflections on the risk analysis of nuclear war. In B. John Garrick (editor), Proceedings of the Workshop on Quantifying Global Catastrophic Risks, Garrick Institute for the Risk Sciences, University of California, Los Angeles, pages 19-50.

Download Preprint PDF • View Proceedings of the Workshop on Quantifying Global Catastrophic Risks

Image credit: Seth Baum