View in Journal of Evolution and Technology

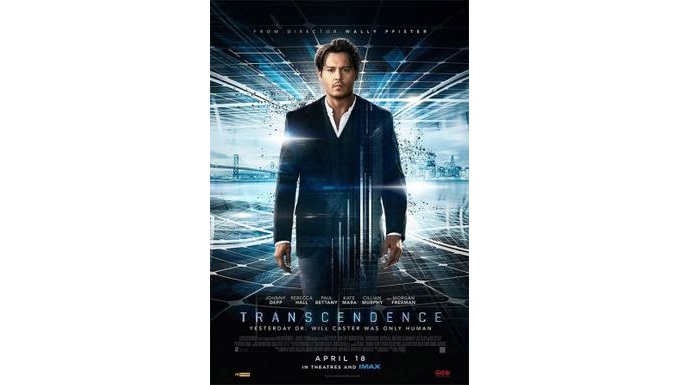

Is it possible to create an artificial mind? Can a human or other biological mind be uploaded into computer hardware? Should these sorts of artificial intelligences be created, and under what circumstances? Would the AIs make the world better off? These and other deep but timely questions are raised by the recent film Transcendence (dir. Wally Pfister, 2014). In this review, I will discuss some of the questions raised by the film and show their importance to real-world decision making about AI and other risks. Reader, be warned: This review gives away much of the film’s plot, though it also suggests ideas to keep in mind when watching.

Academic citation:

Seth D. Baum, 2014. Film review: Transcendence. Journal of Evolution and Technology, vol. 24, no. 2 (September), pages 79-84.

Image credit: Warner Bros.

This blog post was published on 28 July 2020 as part of a website overhaul and backdated to reflect the time of the publication of the work referenced here.