Artificial intelligence is increasingly a factor in a range of global catastrophic risks. This paper studies the role of AI in two closely related domains. The first is systemic risks, meaning risks involving interconnected networks, such as supply chains and critical infrastructure systems. The second is environmental sustainability, meaning risks related to the natural environment’s ability to sustain human civilization on an ongoing basis. The paper is led by Victor Galaz, Deputy Director of the Stockholm Resilience Centre, in conjunction with the Global Systemic Risk group at Princeton University.

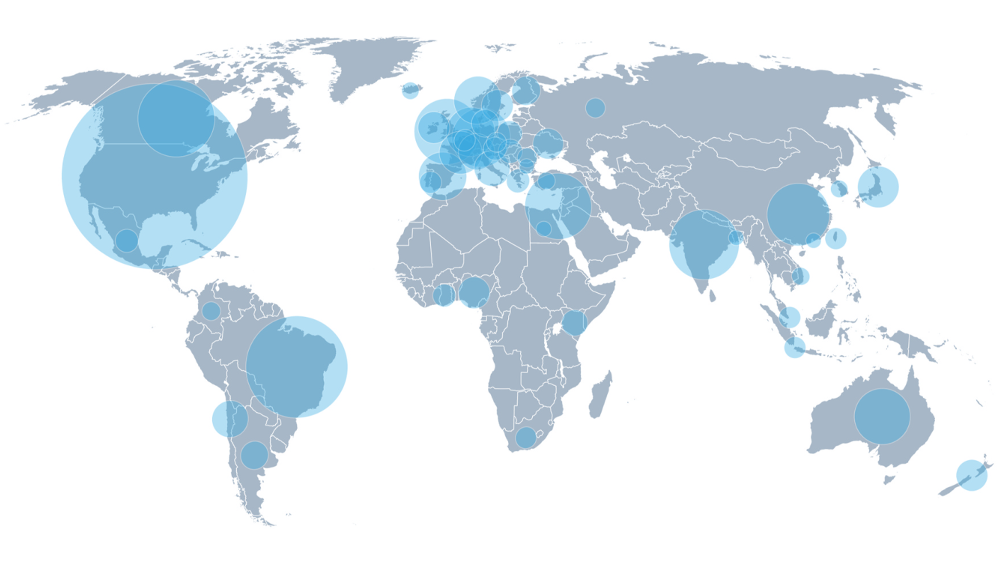

The paper compiles data on corporate investment in AI applications in sectors relevant to environmental sustainability, focusing on agriculture, aquaculture, and forestry. The data come from the Crunchbase investment database. The data indicate that the United States has by far the largest number of companies active in this space, as shown in the graphic above. China has the largest monetary investment, almost 3.5 times larger than the US investment. Across all countries, investment is concentrated primarily in agriculture, with smaller amounts in aquaculture and forestry. Total global investment was $8.5 billion during 2007-2020.

AI is used to support data-driven precision agriculture and environmental management. Sensors collect a variety of environmental data that are used to train machine learning systems. The systems are then used to support agriculture and environmental management decisions. These systems can enable more precise optimization of variables of interest, such as crop yields. The benefits can be substantial, hence the significant ongoing investment in this space.

Unfortunately, as the paper documents, this use of AI also poses several risks. First, biases in the training data can result in flawed guidance, especially for settings in which sensor data are scarce. This problem is compounded by the fact that current AI systems struggle to learn from more conceptual and qualitative information, such as local knowledge built up by generations of farmers [1]. Second, these AI technologies increase the complexity and scope of social-technological-ecological systems, such as by concentrating environmental analysis in a small number of AI-driven corporations [2]. This increases the risk of “normal accidents” in which relatively small initial disturbances propagate across the system, resulting in large harms. Third, by further driving the digitization of agriculture and environmental management, and increasing the connectivity of digital systems, AI increases vulnerability to cyberattack. Concern about the cyberattack risk has already been raised, for example, by the US FBI and Department of Agriculture.

Addressing these risks will require interdisciplinary effort. Thus far, however, these sorts of risks have not been a primary focus of work on AI. For example, the paper analyzes a collection of documents on AI ethics principles and finds that they tend to emphasize social issues rather than environmental issues [3]. Interdisciplinary collaborations, such as the one behind this paper, are essential.

This paper extends GCRI’s work on artificial intelligence and cross-risk evaluation and prioritization. We thank Victor Galaz, Miguel Centeno, and their colleagues at the Stockholm Resilience Centre and the Princeton Global Systemic Risk group for the opportunity to participate in this project.

Academic citation:

Victor Galaz, Miguel A. Centeno, Peter W. Callahan, Amar Causevic, Thayer Patterson, Irina Brass, Seth Baum, Darryl Farber, Joern Fischer, David Garcia, Timon McPhearson, Daniel Jimenez, Brian King, Paul Larcey, and Karen Levy, 2021. Artificial intelligence, systemic risks, and sustainability. Technology in Society, vol. 67 (November), article 101741, DOI 10.1016/j.techsoc.2021.101741.

Notes

[1] On the limitations of current AI systems, see also Deep learning and the sociology of human-level artificial intelligence.

[2] On the role of the corporate sector in AI and the opportunities to improve its practices, see Corporate governance of artificial intelligence in the public interest.

[3] A similar neglect of the natural environment, and of nonhumans more generally, is documented in Moral consideration of nonhumans in the ethics of artificial intelligence.

Image credit: J. Lokrantz/Azote, from Figure 1A of the paper