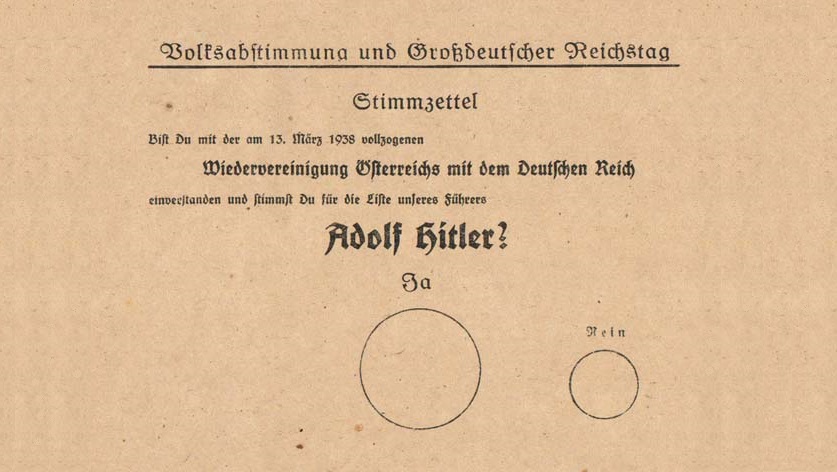

Vote suppression, disinformation, sham elections that give authoritarians the veneer of democracy, and even genocide: all of these are means of manipulating the outcomes of elections. (Shown above: a ballot from the sham 1938 referendum on the annexation of Austria by Nazi Germany; note the larger circle for Ja/Yes.) Countering these manipulations is an ongoing challenge. Meanwhile, work on AI ethics often proposes that AI systems use something similar to democracy. If they do, they may be open to similar manipulations. This paper therefore explores the potential for AI systems to be manipulated and the corresponding implications for AI ethics and governance.

As in a democracy, these AI systems would base decisions on some aggregate of societal values. Democracies aggregate values through voting in elections; AI systems could do the same or use other approaches. In ethics, economics, and political science, the process of aggregating individual values into group decisions is called social choice. AI research often uses other terminology, such as bottom-up ethics, coherent extrapolated volition, human compatibility, and value alignment, as reflected in the names of the Center for Human-Compatible AI and the AI Alignment Forum.

Prior GCRI research on AI social choice ethics has shown that there are many possible aggregates of societal values depending on three variables: (1) standing, meaning who or what is included as a member of society, (2) measurement, meaning how each individual’s values are obtained, and (3) aggregation, meaning how individual values are combined into a group decision.

This paper shows that standing, measurement, and aggregation can all be manipulated. Standing can be manipulated by excluding groups who hold opposing values. Measurement can be manipulated by designing the measurement process to achieve certain results, such as with loaded questions. Aggregation can be manipulated by systematically counting some individuals’ values more than others, such as through gerrymandering. These manipulations may all seem bad, but there can also be good manipulations. For example, the women’s suffrage movement manipulated democracies into giving women the right to vote, a change to standing.
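
To make the standing and aggregation manipulations concrete, here is a toy sketch in Python. It is not drawn from the paper: the nine-person society, the group labels `g1` to `g3`, the districting, and the `aggregate` function are all hypothetical. Measurement is held fixed (each individual's value is assumed to be recorded accurately), so only standing and aggregation vary.

```python
from collections import Counter

# Hypothetical toy society: (individual, group, measured value).
# Measurement is assumed accurate; only standing and aggregation vary.
SOCIETY = [
    ("p1", "g1", "A"), ("p2", "g1", "A"), ("p3", "g1", "A"),
    ("p4", "g2", "B"), ("p5", "g2", "B"), ("p6", "g2", "B"), ("p7", "g2", "B"),
    ("p8", "g3", "A"), ("p9", "g3", "A"),
]

# Hypothetical districting that packs A supporters into one district
# and splits the rest so that B wins two districts out of three.
GERRYMANDER = [["p1", "p2", "p3"], ["p4", "p5", "p8"], ["p6", "p7", "p9"]]


def majority(values):
    """Return the most common value (the toy data here has no ties)."""
    return Counter(values).most_common(1)[0][0]


def aggregate(society, excluded_groups=(), districts=None):
    """Combine individual values into one group decision."""
    # Standing: anyone in an excluded group is not counted as a member of society.
    members = {name: value for name, group, value in society
               if group not in excluded_groups}
    if districts is None:
        # Aggregation: one individual, one vote, simple majority.
        return majority(members.values())
    # Aggregation: majority within each district, then majority of district winners.
    winners = [majority([members[n] for n in d if n in members]) for d in districts]
    return majority(winners)


print(aggregate(SOCIETY))                           # A  (5 A vs 4 B)
print(aggregate(SOCIETY, excluded_groups={"g3"}))   # B  (3 A vs 4 B)
print(aggregate(SOCIETY, districts=GERRYMANDER))    # B  (district winners: A, B, B)
```

The same individual values yield A under a plain majority of everyone, but B once one group loses standing or once votes are counted by gerrymandered districts. In each case the result can still be presented as "what society wants."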

By using these and other manipulations, AI system designers can rig the social choice process to achieve their preferred results. In doing so, they may be able to maintain the veneer of deferring to society’s values, just as authoritarians do with sham elections. Furthermore, people other than the system designers may also be able to manipulate the process. They could pressure the designers to do their bidding, as in the cybersecurity concept of social engineering. They could use adversarial input to bias machine learning algorithms. They could even seek to alter the composition of society, such as through fertility policy or genocide.

The paper outlines a response to AI social choice manipulation using a mix of ethics and governance. Ethics is needed to evaluate which manipulations are good and which are bad, so that the good ones can be promoted and the bad ones countered. The paper surveys existing concepts for the ethics of standing, measurement, and aggregation. Governance is then needed to put these ethical ideas into action. The paper cites prior GCRI research on AI corporate governance to illustrate some approaches to governing AI social choice manipulation. Finally, the paper raises the possibility that, given the challenge of manipulation, AI ethics could use something other than social choice.

The paper extends GCRI’s research on artificial intelligence.

Academic citation:

Baum, Seth D. Manipulating aggregate societal values to bias AI social choice ethics. AI and Ethics, forthcoming, DOI 10.1007/s43681-024-00495-6.

Image credit: unknown